Neuromorphic computing and robots with a sense of touch

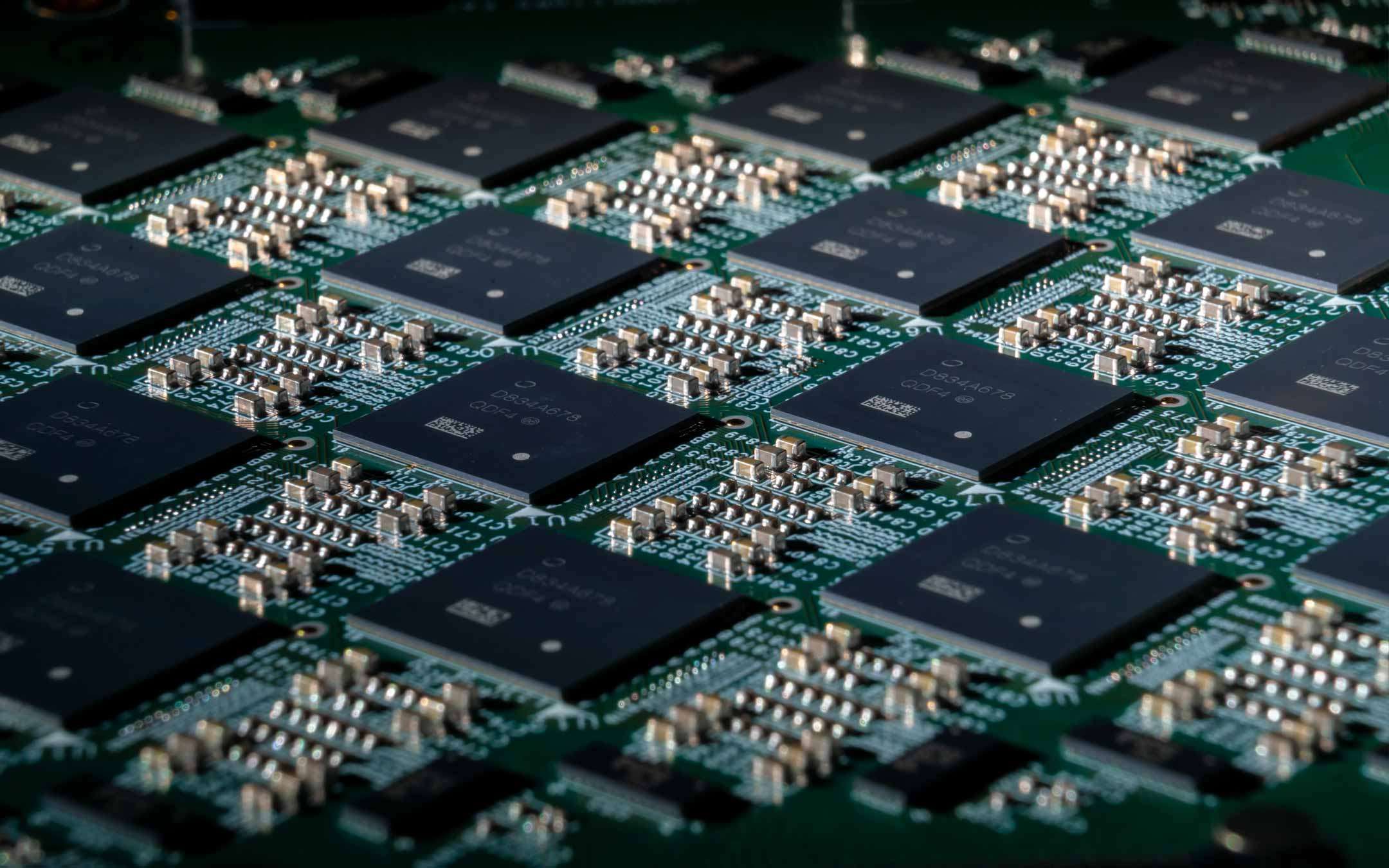

Intel Loihi: neuromorphic computing and artificial skin

An innovation of this kind makes it possible to build robots far more advanced than current ones, potentially able, for example, to grasp objects with the right amount of pressure or to perceive their surroundings, improving interaction in areas such as personal care. This is the comment by Mike Davies, Director of the Intel Neuromorphic Computing Lab:

This research by the National University of Singapore offers an interesting look into the future of robotics, where information is both sensed and processed in an event-driven way, combining multiple modalities. The work adds new evidence that neuromorphic computing delivers significant improvements in latency and energy consumption when the whole system is redesigned around an event-based paradigm spanning sensors, data formats, algorithms, and hardware architecture.

We are talking about artificial skin, a component that requires a processor capable of drawing accurate conclusions in real time from the data collected by the sensors on its surface, while keeping power consumption low.

The research uses the same Loihi chip designed by Intel, which has already been the subject of experiments aimed at building hardware capable of recognizing odors.

... the team at NUS has further enhanced robotic perception by combining visual data with tactile stimuli in a neural network. To do this, they tasked a robot with classifying various opaque containers, each holding a different amount of liquid, using sensory input from the artificial skin and an event-based camera.
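To make "event-based" sensing more concrete, here is a minimal sketch of send-on-delta encoding, in which a sensor emits an event only when its reading has changed by at least a threshold since the last event. This is an illustration under stated assumptions, not the NUS team's actual pipeline: the function name `to_events`, the threshold, and the pressure readings are all hypothetical.

```python
from typing import List, Tuple

# (sample index, polarity): +1 for a rise, -1 for a fall
Event = Tuple[int, int]


def to_events(samples: List[float], threshold: float) -> List[Event]:
    """Send-on-delta encoding (illustrative): emit an event whenever the
    signal has moved by at least `threshold` since the last emitted event."""
    if not samples:
        return []
    events: List[Event] = []
    reference = samples[0]  # signal value at the last emitted event
    for i, value in enumerate(samples[1:], start=1):
        delta = value - reference
        if abs(delta) >= threshold:
            events.append((i, 1 if delta > 0 else -1))
            reference = value
    return events


# Hypothetical pressure readings from a single tactile sensor element
pressure = [0.0, 0.0, 0.1, 0.6, 1.2, 1.2, 1.2, 0.5, 0.0]
events = to_events(pressure, threshold=0.5)
print(events)
```

Stretches where the reading stays flat produce no events at all, which is the basic reason an event-driven system can transmit and process less data than one that handles every sample.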

The acquired sensory information was sent to both a GPU and the neuromorphic chip to compare their processing capabilities. Loihi came out ahead: according to Intel, it processed the sensory data 21% faster than a top-performing GPU while using 45 times less power. Combining event-based vision and touch also gave 10% higher accuracy in object classification than vision alone.

Source: Intel